However, through a process of elimination, students may still be able to work out the correct answer to each test question if their submitted answers are flagged as incorrect. This strategy addresses the issue of students who take a test at the same time in order to share answers. Each time a student takes the test, it is made up of questions that are randomly selected and ordered.
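As a rough illustration of random selection and ordering, here is a minimal Python sketch. The function name `build_test`, the question pool, and the `seed` parameter are all hypothetical and are not taken from Blackboard or any other platform.

```python
import random

def build_test(question_pool, num_questions, seed=None):
    """Return num_questions distinct questions from the pool, in random order."""
    rng = random.Random(seed)
    # sample() draws without replacement, so no question repeats,
    # and the returned list is already in random order.
    return rng.sample(question_pool, num_questions)

# Hypothetical pool of 20 questions; each student gets 5 of them.
pool = [f"Q{i}" for i in range(1, 21)]
test_a = build_test(pool, 5, seed=1)
test_b = build_test(pool, 5, seed=2)
```

Two students sitting the test at the same moment will usually see different questions in a different order, which makes sharing answers much less useful.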

Plagiarism (where students copy text from another source) has been a problem since the early days of the Internet. Online tools like Turnitin help professors check whether students created their work themselves. We here at Winston AI also have a plagiarism tool to check if work was copied from somewhere online. SafeAssign, a tool Blackboard uses, has databases with billions of internet pages and millions of academic papers.

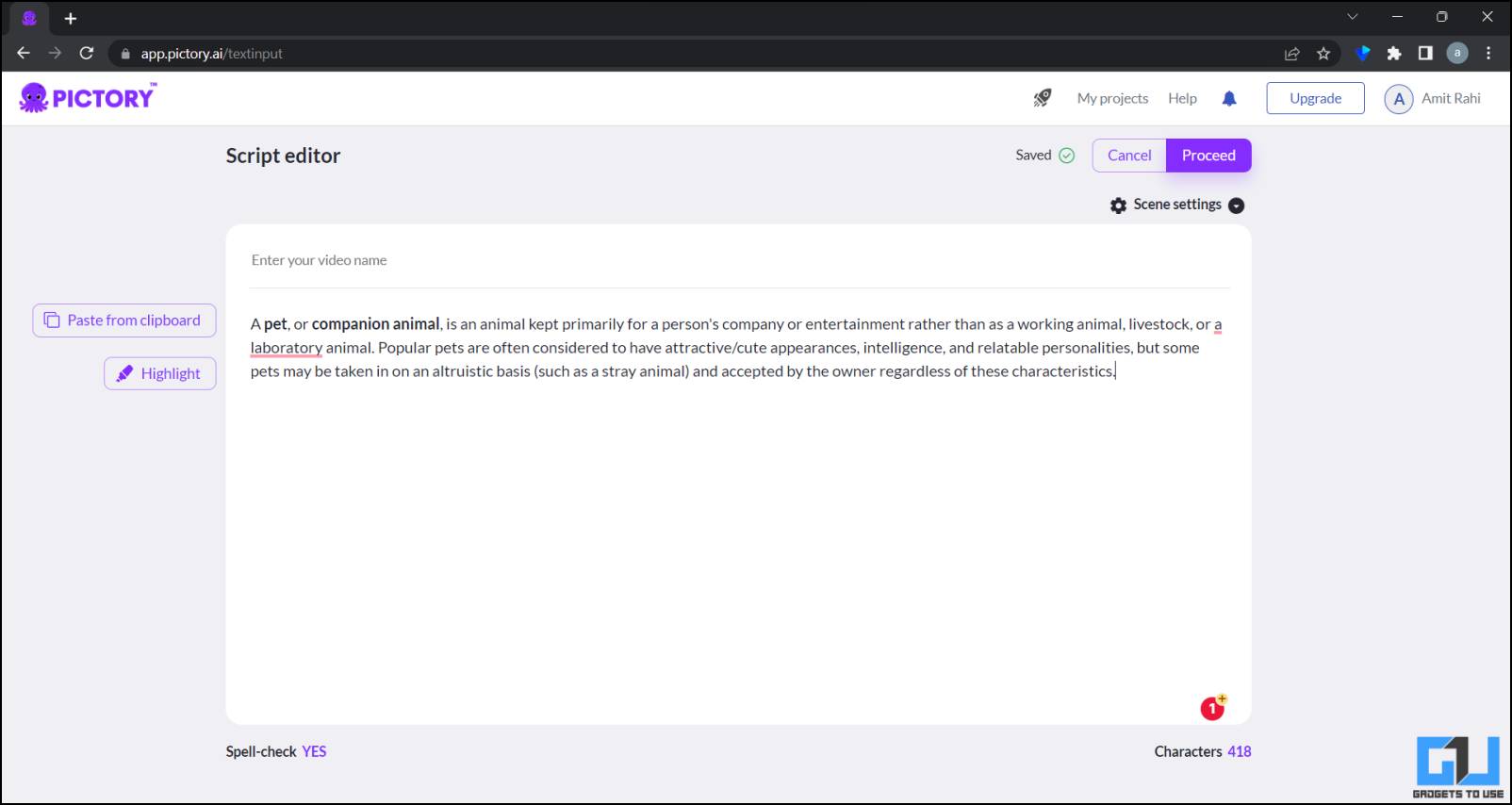

When a student submits an assignment on Blackboard, SafeAssign jumps into action. It uses complex algorithms to scrutinize the text, looking for any similarities with existing content. This covers direct quotes and paraphrased material; AI-generated content, such as ChatGPT output, is flagged only insofar as it overlaps with existing sources. The resulting report is essentially a detailed breakdown showing the parts of the submission that match other sources and the percentage of the text that's similar. It's a valuable resource for instructors to review and assess the originality of the work submitted.

No. SafeAssign, a widely used plagiarism checker, isn’t equipped to reliably detect content generated by ChatGPT. This is because SafeAssign primarily focuses on identifying copied text against its vast database of sources. It operates by comparing submitted texts against previous submissions, websites, and academic papers. The core of its functionality lies in identifying matched phrases and evaluating the originality of a student’s work, not in recognizing how the text was produced.
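To make the idea of matching phrases against a database concrete, here is a minimal Python sketch. It is not SafeAssign's actual algorithm, which is not public; the function names, the five-word phrase length, and the sample texts are assumptions chosen for illustration.

```python
import re

def word_ngrams(text, n=5):
    """Return the set of n-word phrases in text, lowercased, punctuation dropped."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def match_percentage(submission, sources, n=5):
    """Percentage of the submission's n-word phrases that appear in any source."""
    phrases = word_ngrams(submission, n)
    if not phrases:
        return 0.0  # submission is shorter than n words
    known = set()
    for source in sources:
        known |= word_ngrams(source, n)
    return 100.0 * len(phrases & known) / len(phrases)

# Hypothetical one-document "database" and two submissions.
sources = ["the quick brown fox jumps over the lazy dog"]
copied = match_percentage("The quick brown fox jumps over the lazy dog.", sources, n=3)
fresh = match_percentage("An entirely new sentence that nobody has written before", sources, n=3)
```

Under these assumptions, a copied sentence scores high while freshly worded text scores zero. That is why a checker built on phrase matching has little to go on with newly generated ChatGPT output: the wording does not exist in any database yet.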

SafeAssign is a plagiarism detection tool commonly used in educational institutions to identify academic dishonesty. Blackboard itself is primarily a learning management system that allows instructors to manage course content, exams, and communications online. It cannot detect or identify ChatGPT because it is not designed to recognize the use of AI language models or chatbots. SafeAssign, like other platforms, relies on language processing algorithms to compare student submissions against stored databases.

It's up to every teacher, school, editor, and institution to decide exactly where that line is drawn. When I first looked at whether it's possible to fight back against AI-generated plagiarism, and how that might work, it was January 2023, just a few months into the world's exploding awareness of generative AI. More than a year later, it feels like we've been exploring generative AI for years, but we've only looked at the issue for about 18 months.